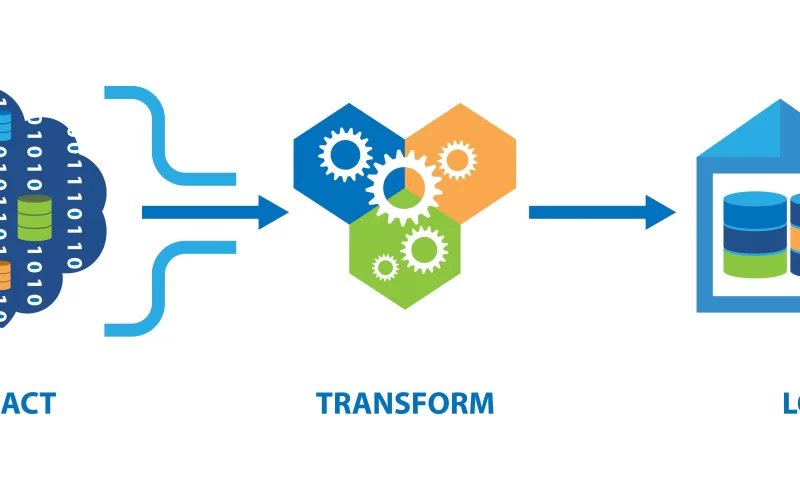

ETL is a three-step (extract, transform, load) data integration process used to collect data from multiple sources. Data is extracted from the source systems, transformed into a format that can be processed, and then loaded into a data warehouse or another target store.

ETL gained popularity as organisations began using multiple databases to store different types of business information. The need to consolidate data spread across those databases created the space for ETL, and it has since become a standard technique in data science and data processing systems.

What is ETL?

ETL is short for Extract, Transform, and Load: three database functions combined into one tool that pulls data out of one database and places it in another. In data warehousing today, the concept is sometimes expanded to E-MPAC-TL, or Extract, Monitor, Profile, Analyze, Clean, Transform, and Load. In other words, it is ETL with an added focus on data quality and metadata.

Extracting

When we look at extraction in depth, the main aim is to collect data from the source system as quickly as possible while placing as little load on that system as possible. This also means that, depending on the situation, the most applicable extraction method must be chosen: source date/time stamps, database log tables, or a hybrid of the two.

Transform & Loading

Transforming and loading is about integrating the data and finally moving the integrated data into the application area, where the end-user community can access it through front-end tools. Here, the focus should be on making the most efficient use of the features the chosen ETL tool provides. In a medium to large-scale data warehouse environment, merely using an ETL tool is not enough: it is necessary to optimize as much as possible rather than customize. Doing so reduces the time spent on the source-to-target development activities that make up the majority of the conventional ETL effort.

Monitoring

Data monitoring enables data verification and is carried out throughout the whole ETL process. It has two main objectives. First, the data should be inspected. A proper balance must be struck between checking the incoming data as thoroughly as possible and not slowing down the entire ETL system with too much checking. The inside-out approach used in Ralph Kimball’s testing technique could be applied here. This technique captures all errors consistently, based on a predefined set of metadata business rules, and allows them to be tracked through a simple star schema that gives a view of how data quality evolves over time. Second, close attention should be paid to the quality of the ETL system itself, i.e. to collecting the appropriate functional metadata. This metadata includes start and end timings for ETL processes at different levels (overall, by stage or sub-level, and by individual ETL mapping or job).
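The two monitoring objectives above can be sketched in a few lines of Python. This is a minimal illustration, not Kimball's actual design: the screen names, the `error_event` and `etl_run` tables, and the sample rows are all hypothetical, and a single error table stands in for a full star schema.

```python
import sqlite3
import time

def not_null(column):
    """Build a screen that passes only when `column` has a value."""
    return lambda row: row.get(column) is not None

def non_negative(column):
    """Build a screen that passes when `column` is missing or >= 0."""
    return lambda row: row.get(column) is None or row[column] >= 0

# Hypothetical business rules, keyed by a screen name.
SCREENS = {
    "customer_not_null": not_null("customer"),
    "amount_non_negative": non_negative("amount"),
}

def run_screens(conn, batch_id, rows):
    """Apply every screen to every row, log failures, return clean rows."""
    conn.execute("CREATE TABLE IF NOT EXISTS error_event "
                 "(batch_id INTEGER, screen TEXT, row_index INTEGER)")
    conn.execute("CREATE TABLE IF NOT EXISTS etl_run "
                 "(batch_id INTEGER, step TEXT, started REAL, ended REAL)")
    started = time.time()
    clean = []
    for index, row in enumerate(rows):
        failed = [name for name, check in SCREENS.items() if not check(row)]
        conn.executemany("INSERT INTO error_event VALUES (?, ?, ?)",
                         [(batch_id, name, index) for name in failed])
        if not failed:
            clean.append(row)
    # Operational metadata: start and end time of this step.
    conn.execute("INSERT INTO etl_run VALUES (?, ?, ?, ?)",
                 (batch_id, "screening", started, time.time()))
    conn.commit()
    return clean

conn = sqlite3.connect(":memory:")
rows = [
    {"customer": "alice", "amount": 10.0},
    {"customer": None, "amount": 5.0},
    {"customer": "bob", "amount": -1.0},
]
clean = run_screens(conn, 1, rows)
errors = conn.execute(
    "SELECT screen, row_index FROM error_event ORDER BY row_index").fetchall()
```

Because every failure lands in the same table with a batch identifier, counting errors per screen and per batch gives a simple view of data quality over time.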

What’s the ETL procedure?

Extract, Transform, Load (ETL) is an automated process that takes raw data, extracts the information needed for analysis, transforms it into a format that serves business requirements, and loads it into a data warehouse. ETL generally summarizes data to reduce its size and improve performance for specific types of analysis.
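As a rough illustration of the three steps, the sketch below extracts rows from CSV text, summarizes them per customer, and loads the result into an in-memory SQLite database that stands in for a warehouse. The sample data, table name, and function names are assumptions made for this example only.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a source system.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,4.25
3,alice,7.00
"""

def extract(text):
    """Read raw rows from CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Summarize order rows into one total per customer."""
    totals = {}
    for row in rows:
        customer = row["customer"]
        totals[customer] = totals.get(customer, 0.0) + float(row["amount"])
    return sorted(totals.items())

def load(conn, totals):
    """Write the summarized rows into a warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customer_totals "
                 "(customer TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT OR REPLACE INTO customer_totals VALUES (?, ?)",
                     totals)
    conn.commit()

def run_etl(conn, text=RAW_CSV):
    """Chain the three steps: extract, then transform, then load."""
    load(conn, transform(extract(text)))

warehouse = sqlite3.connect(":memory:")
run_etl(warehouse)
result = warehouse.execute(
    "SELECT customer, total FROM customer_totals ORDER BY customer").fetchall()
```

The transform step here is the summarization mentioned above: three order rows become two customer rows before anything reaches the warehouse.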

When building an ETL infrastructure, you must plan and test properly before integrating data sources. Let’s look at how to build the ETL infrastructure more effectively.

Understand the organizational needs

First, determine whether to build or buy your ETL architecture based on your needs. Buying is likely the right choice if your company collects data from a handful of standard sources. But if you need to derive specific results from data located in many different sources, you will probably need to build a custom solution. A proper analysis of data sources, usage, and latency will give you a clear understanding of the organizational requirements.

Data source Auditing

Building a profile of your data sources helps move the architecture process to the next level. You need to understand the production databases that drive your business, as well as the other data sets you rely on. Doing so makes the organization’s top priorities clear, and once they are defined, planning can be carried out more effectively.

Data extraction

During the data extraction process, the organization determines which data source fields are to be extracted. Data extraction is commonly done in one of three ways.

The first approach is update notification, which is the simplest way to extract information from source systems: the system notifies you automatically whenever a record changes.
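A minimal sketch of the idea, assuming the source system can call a hook on every change: the hook `on_record_changed` and the event shape are hypothetical, and a real system would more likely use a message queue or a webhook than an in-process queue.

```python
import queue

# In-process stand-in for whatever channel carries the notifications.
change_events = queue.Queue()

def on_record_changed(record_id, new_values):
    """Hypothetical hook the source system calls whenever a record changes."""
    change_events.put({"id": record_id, "values": new_values})

def drain_changes():
    """Collect all pending change notifications for the next ETL run."""
    pending = []
    while True:
        try:
            pending.append(change_events.get_nowait())
        except queue.Empty:
            return pending

# Simulate two changes in the source, then pick them up.
on_record_changed(7, {"status": "shipped"})
on_record_changed(9, {"status": "cancelled"})
batch = drain_changes()
```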

The second approach is incremental extraction. It applies when a system cannot send update notifications but can identify which records have changed. Those changes need to be identified and propagated during the subsequent ETL phases.
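One common way to identify changed records is a timestamp "watermark": each run asks only for rows modified since the previous run. The sketch below assumes the source table has an `updated_at` column holding ISO 8601 strings; the table, column, and function names are illustrative.

```python
import sqlite3

def extract_changed(conn, last_watermark):
    """Return rows modified after `last_watermark`, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Keep the old watermark when nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
source.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "alice", "2024-01-01T09:00:00"),
    (2, "bob", "2024-01-02T09:00:00"),
])

# First run: an empty watermark sorts before every timestamp, so all rows match.
first_batch, watermark = extract_changed(source, "")

# A record changes in the source; the second run picks up only that record.
source.execute("UPDATE customers SET name = 'robert', "
               "updated_at = '2024-01-03T09:00:00' WHERE id = 2")
second_batch, watermark = extract_changed(source, watermark)
```

ISO 8601 timestamps in a fixed format compare correctly as plain strings, which is why the `>` comparison works here. Note that this approach does not see deleted rows.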

Full extraction is the third method. It requires keeping a copy of the last extraction so that it can be compared with the current one to identify what has changed. Because of the high data transfer volume involved, it is less common and is only suitable for small tables.
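The comparison between the previous and the current full extract can be sketched as a dictionary diff keyed by primary key. The snapshot contents and the function name are made up for illustration.

```python
def diff_snapshots(previous, current):
    """Compare two full extracts, each a dict of primary key -> row values."""
    inserted = {k: v for k, v in current.items() if k not in previous}
    deleted = {k: v for k, v in previous.items() if k not in current}
    updated = {k: v for k, v in current.items()
               if k in previous and previous[k] != v}
    return inserted, updated, deleted

# Copy kept from the last extraction, and today's full extraction.
previous = {1: ("alice", "NY"), 2: ("bob", "LA"), 3: ("carol", "SF")}
current = {1: ("alice", "NY"), 2: ("bob", "SEA"), 4: ("dave", "TX")}

inserted, updated, deleted = diff_snapshots(previous, current)
```

Both snapshots must be held in full to run the comparison, which illustrates why this method is practical only for small tables.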

Data Transform

The processing power of data warehouses has changed the transformation stage of ETL. Modern ETL systems leave the majority of the transformations to the analytics stage and instead concentrate on loading minimally processed data into the warehouse. This increases the flexibility of your ETL system and gives your analysts more control over data modeling.

When loading data into your warehouse, some commonly occurring situations should be handled, including restructuring data, data typing, and data schema evolution.
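The three situations can be shown together in one small sketch: nested records are flattened (restructuring), Python values are mapped to column types (data typing), and columns that appear later are added to the table on the fly (schema evolution). The table, the sample records, and the helper names are assumptions for this example, and table and column names are interpolated into SQL directly, so they must come from a trusted source.

```python
import sqlite3

def flatten(record, prefix=""):
    """Restructure a nested dict into a flat one with underscore-joined keys."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}_"))
        else:
            flat[name] = value
    return flat

def sql_type(value):
    """Map a Python value to a SQLite column type."""
    if isinstance(value, (bool, int)):
        return "INTEGER"
    if isinstance(value, float):
        return "REAL"
    return "TEXT"

def load_record(conn, table, record):
    """Load one record, adding any columns the table does not have yet."""
    flat = flatten(record)
    # PRAGMA table_info returns one row per column; index 1 is the name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for column, value in flat.items():
        if column not in existing:
            conn.execute(
                f"ALTER TABLE {table} ADD COLUMN {column} {sql_type(value)}")
    columns = ", ".join(flat)
    marks = ", ".join("?" for _ in flat)
    conn.execute(f"INSERT INTO {table} ({columns}) VALUES ({marks})",
                 list(flat.values()))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER)")
load_record(conn, "users", {"id": 1, "address": {"city": "Oslo"}})
# The second record introduces a new field, so the schema evolves.
load_record(conn, "users", {"id": 2, "address": {"city": "Rome"}, "score": 9.5})

columns = [row[1] for row in conn.execute("PRAGMA table_info(users)")]
rows = conn.execute(
    "SELECT id, address_city, score FROM users ORDER BY id").fetchall()
```

Rows loaded before a column existed simply hold NULL for it, which is the usual behavior when a schema grows over time.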

The final stage of ETL architecture

The information you deliver to your organization must be timely and accurate. The processes you introduce at this stage help ensure that accuracy and build organizational trust in your data.

Job scheduling is the first thing to put in place. Scheduling jobs sensibly can reduce the load on your internal systems. Monitoring your system means failures are detected and escalated quickly, so the team is alerted and can take action immediately. It helps to combine this with the monitoring and alerting systems you already use for other parts of your IT infrastructure. Finally, recovery, ETL testing, and security, including the secure transfer of data, must also be taken into account.
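Recovery and alerting are often combined in a small wrapper around each job: retry a few times, and alert the team only when every attempt has failed. The sketch below assumes the job is started by an external scheduler such as cron; `run_with_retry`, `flaky_load`, and the alert callback are hypothetical names, and a real alert would go to your existing alerting system rather than a list.

```python
import time

def run_with_retry(job, alert, max_attempts=3, delay_seconds=0.0):
    """Run `job`; retry on failure and alert if every attempt fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception as exc:
            if attempt == max_attempts:
                alert(f"job failed after {attempt} attempts: {exc}")
                raise
            time.sleep(delay_seconds)

alerts = []          # stand-in for a real alerting channel
calls = {"count": 0}

def flaky_load():
    """Hypothetical load step that fails once, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 2:
        raise ConnectionError("warehouse unavailable")
    return "loaded"

status = run_with_retry(flaky_load, alerts.append)
```

Here the first attempt fails, the second succeeds, and no alert is raised. An alert is sent only when the final attempt fails, which keeps transient errors from paging the team.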